This post lists the steps for installing Openfiler and creating iSCSI targets that can be used as storage for Oracle databases. It covers both single-path and multipath iSCSI targets. Lastly, it shows how to attach iSCSI targets directly to a VirtualBox VM from the host.

Installing openfiler

Creating iSCSI Targets

Single Path iSCSI Target

Multipath iSCSI Target

Attach iSCSI Target to VirtualBox from Host

Installing openfiler

Download the latest Openfiler software from openfiler.com.

The Openfiler software is installed on a VirtualBox VM. To install Openfiler on VirtualBox, add the downloaded ISO image to the CD-ROM and set the boot order so that the CD-ROM comes before the hard disk. Two virtual disks are created in the VM (see image below). One is used to install the Openfiler software (OS) (openfiler.vdi). The other is used to create iSCSI targets (scsidisk.vdi).

Make sure the disk used for the Openfiler OS install is larger than 8GB, even though Openfiler states that 8GB is the minimum size. The installation still failed even when the disk was only slightly larger than 8GB.

Once the disks are in order, boot from the Openfiler ISO image. The screenshots below highlight the key steps (not all of them).

Select only the disk designated for installing the OS. Uncheck the disk designated for creating iSCSI targets.

Set the IPs for the Openfiler setup. In this case a static IP configuration was used rather than DHCP. The Openfiler server has only one NIC, so the iSCSI targets will be single path. If multipath iSCSI targets are required, add another NIC to the Openfiler server. Refer to the multipath iSCSI target step for more.

Once the installation is complete, log in to the web administration portal. The URL is listed at the end of the installation process.

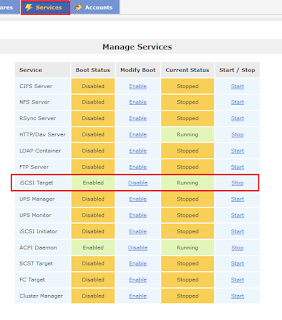

Log in to the web console (the default username is "openfiler" and the password is "password"). Go to the Services tab, then enable and start the iSCSI target service.

The Openfiler installation is now complete and ready for creating iSCSI targets.

Creating iSCSI Targets

To create iSCSI targets, log in to the web console if you are not already logged in. The iSCSI targets created on the Openfiler server are attached to a server with hostname city7. In this case city7 is the iSCSI initiator.

For the city7 server to access the iSCSI target, add city7 to the network access configuration under the System tab.

Create the iSCSI target using the disk designated for it. To do this, select the Volumes tab, then the Block Devices link, and finally click on the actual disk. In this case the disk used for the iSCSI target is /dev/sdb.

This opens the create partition page. The edit partition section shows the full (100%) storage. In the create partition section, select the required amount of storage (10GB in this case) and create a physical partition by clicking the Create button.

The new partition is shown in the edit partition section. Even though 10GB was selected, the created partition was smaller than 10GB.

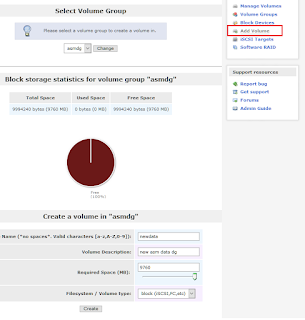

Create a volume group using the partition created earlier. In this case the volume group is named asmdg.

Once created, the new volume group appears under the volume group management section.

The next step is to create a volume within the volume group. To do this, select the add volume link and create a volume of the required size.

Once created, the volume information is listed under the associated volume group.

The next step is to create an iSCSI target for the volume. This is done through the iSCSI Targets link. Select the Target Configuration tab and create an iSCSI Qualified Name (IQN) for the target. The screenshot below shows an IQN being created. Openfiler auto-generates an IQN, but it can be renamed as desired. In this case the IQN is

iqn.2006-01.com.openfiler:asm.newdata
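As a sketch of how that name is structured, an IQN has several parts (RFC 3720 naming): the literal `iqn.`, a year-month date, the reversed domain name of the naming authority, and an optional string after a colon. The Python below splits the IQN used in this post into those parts. The `parse_iqn` helper and its simplified regex are illustrative assumptions, not anything Openfiler provides.

```python
import re
from typing import NamedTuple, Optional


class Iqn(NamedTuple):
    date: str               # year-month, e.g. "2006-01"
    authority: str          # reversed domain name, e.g. "com.openfiler"
    unique: Optional[str]   # optional part after ":", chosen by the naming authority


# Hypothetical helper: a simplified pattern for "iqn." + yyyy-mm + "." + domain [+ ":" + string]
_IQN_RE = re.compile(r"^iqn\.(\d{4}-\d{2})\.([a-z0-9.-]+?)(?::(.+))?$")


def parse_iqn(name: str) -> Iqn:
    """Split an iSCSI Qualified Name into its parts; raise ValueError if malformed."""
    match = _IQN_RE.match(name)
    if match is None:
        raise ValueError(f"not a valid IQN: {name!r}")
    return Iqn(*match.groups())


if __name__ == "__main__":
    print(parse_iqn("iqn.2006-01.com.openfiler:asm.newdata"))
```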

Select the LUN Mapping tab and map the LUN (volume) to the IQN created earlier. The LUN name is the description of the volume created earlier (inside the orange box), not the name of the volume.

The mapping is now complete.

Finally, update the network access control list (ACL) to allow access to the iSCSI target. As mentioned earlier, the server that will access the iSCSI target is city7.

There is no need to set up CHAP authentication unless password protection is needed for the iSCSI targets.

Creating the iSCSI target is now complete. The next step is to consume the iSCSI target.

Single Path iSCSI Target

The city7 server that will consume the iSCSI target is running RHEL 7.4 (3.10.0-693.el7.x86_64). To access the iSCSI target, install the following RPM:

iscsi-initiator-utils-6.2.0.874-4.el7.x86_64

Enable the iscsid service. If the service is down, it is started automatically during target discovery. Even so, it is best to enable it so that it starts automatically across reboots.

# systemctl enable iscsid.service

# systemctl list-unit-files | grep iscsid | grep enable

iscsid.service enabled

systemctl status iscsid

● iscsid.service - Open-iSCSI

Loaded: loaded (/usr/lib/systemd/system/iscsid.service; enabled; vendor preset: disabled)

Active: active (running)

The next step is to discover the iSCSI target (-t st is the short form of -t sendtargets):

# iscsiadm -m discovery -t st -p 192.168.0.94

192.168.0.94:3260,1 iqn.2006-01.com.openfiler:asm.newdata
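Each line of sendtargets discovery output has the form `ip:port,tpgt target-iqn`, where `tpgt` is the target portal group tag. If the login step is scripted, that output can be turned into records first. Here is a minimal sketch. The `parse_sendtargets` name is hypothetical, and it only handles the IPv4 layout shown above.

```python
from typing import List, NamedTuple


class DiscoveredTarget(NamedTuple):
    ip: str
    port: int
    tpgt: int   # target portal group tag (the number after the comma)
    iqn: str


def parse_sendtargets(output: str) -> List[DiscoveredTarget]:
    """Parse `iscsiadm -m discovery -t st` output (IPv4 portals assumed)."""
    targets = []
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue
        portal, iqn = line.split(maxsplit=1)
        address, tpgt = portal.rsplit(",", 1)
        ip, port = address.rsplit(":", 1)   # the port follows the last colon
        targets.append(DiscoveredTarget(ip, int(port), int(tpgt), iqn))
    return targets


if __name__ == "__main__":
    sample = "192.168.0.94:3260,1 iqn.2006-01.com.openfiler:asm.newdata"
    for t in parse_sendtargets(sample):
        # Print the matching login command used later in this post
        print(f"iscsiadm -m node -T {t.iqn} -p {t.ip} -l")
```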

If the discovery fails as shown below, check the network access configuration and the network ACL. The network/host entry must be considered together with its netmask.

# iscsiadm -m discovery -t st -p 192.168.0.94

iscsiadm: No portals found

To confirm that this is a network access issue, comment out the entries in /etc/initiators.allow and /etc/initiators.deny on the Openfiler server and try again. These are auto-generated files, and editing them manually is not advised. For example, suppose the IP of the iSCSI initiator (city7 in this case) is 192.168.0.85. If the netmask was set to 255.255.255.0 instead of 255.255.255.255 (which restricts access to that single IP), the /etc/initiators.allow entry would be

iqn.2006-01.com.openfiler:asm.newdata 192.168.0.85/24

This is not what is expected and could cause iSCSI discovery to fail.
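Python's standard `ipaddress` module shows the difference between the two netmasks. A /24 entry covers the whole 192.168.0.0/24 subnet, whereas /32 (255.255.255.255) covers only city7. `strict=False` is needed because 192.168.0.85/24 has host bits set, which is why it is not a clean network entry. The `allowed` helper and the second address (192.168.0.50) are hypothetical and only illustrate the point.

```python
import ipaddress


def allowed(entry: str, client_ip: str) -> bool:
    """Return True if client_ip falls inside the network described by entry."""
    # strict=False accepts entries such as 192.168.0.85/24 whose host bits are set
    network = ipaddress.ip_network(entry, strict=False)
    return ipaddress.ip_address(client_ip) in network


print(ipaddress.ip_network("192.168.0.85/24", strict=False))   # 192.168.0.0/24
print(allowed("192.168.0.85/24", "192.168.0.50"))               # True: whole subnet
print(allowed("192.168.0.85/32", "192.168.0.50"))               # False: only city7
print(allowed("192.168.0.85/255.255.255.255", "192.168.0.85"))  # True
```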

If the targets are discovered without any issue, log in to the target manually:

# iscsiadm -m node -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.0.94 -l

Logging in to [iface: default, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260] (multiple)

Login to [iface: default, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260] successful.

This will show a new disk on the iSCSI initiator server (city7):

ls -l /dev/disk/by-path/

lrwxrwxrwx. 1 root root 9 Mar 22 15:23 ip-192.168.0.94:3260-iscsi-iqn.2006-01.com.openfiler:asm.newdata-lun-0 -> ../../sdd

fdisk will now show the new disk provided by the iSCSI target:

fdisk -l

...

Disk /dev/sdd: 10.2 GB, 10234101760 bytes, 19988480 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes
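The fdisk figures can be cross-checked with a little arithmetic. fdisk's "10.2 GB" is decimal (10^9 bytes). The multipath output later in this post reports the same LUN as size=9.5G, which lines up with binary gibibytes (2^30 bytes). This is only a worked check of the numbers above, not a tool.

```python
SECTOR_SIZE = 512        # bytes per logical sector, as reported by fdisk
sectors = 19_988_480     # sector count of /dev/sdd from the fdisk output

size_bytes = sectors * SECTOR_SIZE
print(size_bytes)                    # 10234101760, matches fdisk
print(round(size_bytes / 10**9, 1))  # 10.2, fdisk's decimal "GB"
print(size_bytes / 2**30)            # 9.53125 GiB, consistent with multipath's 9.5G
```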

Run the following so that the iSCSI initiator automatically logs in to the target across reboots:

iscsiadm -m node -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.0.94 --op update -n node.startup -v automatic

If the automatic login needs to be changed to manual login, use the following command:

iscsiadm -m node -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.0.94 --op update -n node.startup -v manual

Since target discovery and login are working, create a partition on the new disk and an appropriate udev rule.

fdisk /dev/sdd

fdisk -l

Disk /dev/sdd: 10.2 GB, 10234101760 bytes, 19988480 sectors

...

Device Boot Start End Blocks Id System

/dev/sdd1 2048 19988479 9993216 83 Linux

To create the udev rule, get the SCSI ID of the disk:

/usr/lib/udev/scsi_id -g -u -d /dev/sdd

14f504e46494c4552484a535350652d4c774b6f2d5a597173

With the SCSI ID in hand, create the udev rule for Oracle ASM with role separation (in a rules file under /etc/udev/rules.d/) as below:

KERNEL=="sd?1",SUBSYSTEM=="block",PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",RESULT=="14f504e46494c4552484a535350652d4c774b6f2d5a597173",SYMLINK+="oracleasm/ndata1",OWNER="grid", GROUP="asmadmin", MODE="0660"
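If several ASM disks are needed, the rule can be generated from each SCSI ID rather than edited by hand. Here is a minimal Python sketch. The function name is hypothetical, and its defaults (grid, asmadmin, 0660) are simply copied from the rule above. It reproduces that rule without the optional spaces after commas.

```python
def asm_udev_rule(scsi_id: str, alias: str, owner: str = "grid",
                  group: str = "asmadmin", mode: str = "0660") -> str:
    """Hypothetical helper: build a rule that symlinks partition 1 of the disk with this SCSI ID."""
    return (
        'KERNEL=="sd?1",SUBSYSTEM=="block",'
        'PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent",'
        f'RESULT=="{scsi_id}",SYMLINK+="oracleasm/{alias}",'
        f'OWNER="{owner}",GROUP="{group}",MODE="{mode}"'
    )


if __name__ == "__main__":
    print(asm_udev_rule("14f504e46494c4552484a535350652d4c774b6f2d5a597173", "ndata1"))
```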

Trigger the udev rules and check that the new rule takes effect:

ls -l /dev/oracleasm/*

lrwxrwxrwx. 1 root root 7 Mar 22 15:44 /dev/oracleasm/data1 -> ../sdb1

lrwxrwxrwx. 1 root root 7 Mar 22 15:44 /dev/oracleasm/fra1 -> ../sdc1

# udevadm trigger --type=devices --action=change

# ls -l /dev/oracleasm/*

lrwxrwxrwx. 1 root root 7 Mar 22 15:45 /dev/oracleasm/data1 -> ../sdb1

lrwxrwxrwx. 1 root root 7 Mar 22 15:45 /dev/oracleasm/fra1 -> ../sdc1

lrwxrwxrwx. 1 root root 7 Mar 22 15:45 /dev/oracleasm/ndata1 -> ../sdd1

The next step is to add the disk to ASM, which concludes the single-path iSCSI setup. For completeness, the following two commands are also included.

To log out of a target, use the following command:

# iscsiadm -m node -u -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.0.94

Logging out of session [sid: 2, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260]

Logout of [sid: 2, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260] successful.

To delete a target, use the following command. This only removes the entry on the iSCSI initiator, not the actual iSCSI target.

# iscsiadm -m node -o delete -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.0.94

Multipath iSCSI Target

Having a single network path to the iSCSI target creates a single point of failure. The solution is to have multiple network paths to the same iSCSI target. Preferably these paths use different network segments with no common hardware (e.g. NICs, switches). The following diagram shows the multipath configuration between the iSCSI target (Openfiler) and the iSCSI initiator (city7).

The same iSCSI target is exposed via IPs 192.168.0.94 and 192.168.1.88. These can be configured during the Openfiler installation or later.

The iSCSI initiator also has two NICs, with IPs 192.168.0.85 and 192.168.1.85, which reach the iSCSI target through different network paths. Once both NICs are configured, enable network access through the Openfiler web console. This needs to be done in the network access configuration (under the System tab) and in the network ACL (Volumes -> iSCSI Targets).

Apart from these changes (adding the second NIC and granting access), nothing else changes on the iSCSI target.

On the iSCSI initiator, install the multipath software in addition to the iSCSI software mentioned in the single path iSCSI section:

device-mapper-multipath-libs-0.4.9-111.el7.x86_64.rpm

device-mapper-multipath-0.4.9-111.el7.x86_64.rpm

Enable and start the multipath service. Also start the iscsid service if it is not already running.

systemctl enable multipathd.service

systemctl start multipathd.service

systemctl status multipathd.service

If the /etc/multipath.conf file does not exist, create one from the provided template using the following command:

mpathconf --enable

This creates a multipath.conf file with every section either commented out or empty. Add the following to the defaults section and the blacklist section. Among other things, the defaults section tells multipath to use user-friendly names and round robin as the path selector.

cat /etc/multipath.conf | grep -A5 defaults

defaults {

find_multipaths yes

user_friendly_names yes

failback immediate

path_selector "round-robin 0"

}

Add the following to the blacklist section:

blacklist {

devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

devnode "^hd[a-z]"

devnode "^cciss!c[0-9]d[0-9]*[p[0-9]*]"

}

Next, log in to the iSCSI target using both paths:

iscsiadm -m node -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.0.94 -l

Logging in to [iface: default, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260] (multiple)

Login to [iface: default, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260] successful.

iscsiadm -m node -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.1.88 -l

Logging in to [iface: default, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.1.88,3260] (multiple)

Login to [iface: default, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.1.88,3260] successful.

If login is successful, set automatic login for both paths:

# iscsiadm -m node -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.0.94 --op update -n node.startup -v automatic

# iscsiadm -m node -T iqn.2006-01.com.openfiler:asm.newdata -p 192.168.1.88 --op update -n node.startup -v automatic

Check that the multipath device is active:

multipath -ll

mpathf (14f504e46494c4552484a535350652d4c774b6f2d5a597173) dm-0 OPNFILER,VIRTUAL-DISK

size=9.5G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=1 status=active

| `- 11:0:0:0 sde 8:64 active ready running

`-+- policy='round-robin 0' prio=1 status=enabled

`- 10:0:0:0 sdd 8:48 active ready running
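The failover checks later in this section come down to reading the per-path lines of `multipath -ll`. Those lines can be parsed as a sketch. It relies only on the layout shown above: `H:C:T:L sdX major:minor`, followed by the dm state, the path checker state and the online state. The regex and the `path_states` name are assumptions, not part of multipath-tools.

```python
import re
from typing import Dict

# Matches per-path lines such as "| `- 11:0:0:0 sde 8:64 active ready running"
_PATH_RE = re.compile(
    r"(\d+:\d+:\d+:\d+)\s+(sd[a-z]+)\s+\d+:\d+\s+(\w+)\s+(\w+)\s+(\w+)"
)


def path_states(multipath_ll: str) -> Dict[str, str]:
    """Map each sd device to 'dm_state checker_state', e.g. 'active ready'."""
    states = {}
    for match in _PATH_RE.finditer(multipath_ll):
        _hctl, device, dm_state, checker_state, _online = match.groups()
        states[device] = f"{dm_state} {checker_state}"
    return states


SAMPLE = """\
mpathf (14f504e46494c4552484a535350652d4c774b6f2d5a597173) dm-0 OPNFILER,VIRTUAL-DISK
size=9.5G features='0' hwhandler='0' wp=rw
|-+- policy='round-robin 0' prio=1 status=active
| `- 11:0:0:0 sde 8:64 active ready running
`-+- policy='round-robin 0' prio=1 status=enabled
  `- 10:0:0:0 sdd 8:48 active ready running
"""

if __name__ == "__main__":
    print(path_states(SAMPLE))
```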

The by-path links show which network path each sd* device belongs to:

ls -l /dev/disk/by-path/

... ip-192.168.0.94:3260-iscsi-iqn.2006-01.com.openfiler:asm.newdata-lun-0 -> ../../sdd

... ip-192.168.1.88:3260-iscsi-iqn.2006-01.com.openfiler:asm.newdata-lun-0 -> ../../sde
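The by-path link names encode the portal and LUN, so the device-to-path mapping can also be derived programmatically. Here is a sketch that parses names of the form shown above. The regex assumes an IPv4 portal, and the link-to-device pairs are copied from the listing rather than read from /dev, so this is an illustration only.

```python
import re
from typing import NamedTuple


class ByPath(NamedTuple):
    ip: str
    port: int
    iqn: str
    lun: int


# Assumed layout: ip-<IPv4>:<port>-iscsi-<iqn>-lun-<n>
_BY_PATH_RE = re.compile(r"^ip-([\d.]+):(\d+)-iscsi-(iqn\..+)-lun-(\d+)$")


def parse_by_path(name: str) -> ByPath:
    """Split a /dev/disk/by-path iSCSI link name into portal, IQN and LUN."""
    match = _BY_PATH_RE.match(name)
    if match is None:
        raise ValueError(f"not an iSCSI by-path name: {name!r}")
    ip, port, iqn, lun = match.groups()
    return ByPath(ip, int(port), iqn, int(lun))


if __name__ == "__main__":
    links = {  # link name -> device, as listed above
        "ip-192.168.0.94:3260-iscsi-iqn.2006-01.com.openfiler:asm.newdata-lun-0": "sdd",
        "ip-192.168.1.88:3260-iscsi-iqn.2006-01.com.openfiler:asm.newdata-lun-0": "sde",
    }
    for link, device in links.items():
        info = parse_by_path(link)
        print(f"{device}: portal {info.ip}:{info.port}, LUN {info.lun}")
```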

The next step is to create a user-friendly name for the multipath device. To do this, use the WWID (World Wide Identifier) of the mpath device (1365511.1).

/usr/lib/udev/scsi_id -g -u -d /dev/mapper/mpathf

14f504e46494c4552484a535350652d4c774b6f2d5a597173
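The WWID is identical to the scsi_id value from the single-path section, since both come from the same LUN. It also appears to be readable. Assume the leading `1` is the identifier-type prefix that scsi_id adds. The remaining hex digits then decode to ASCII: the vendor string `OPNFILER` (also shown by multipath -ll) followed by a volume-specific identifier. That split is an interpretation, not something the post states. Here is a quick decode:

```python
WWID = "14f504e46494c4552484a535350652d4c774b6f2d5a597173"

# Assumption: first character = scsi_id identifier-type prefix,
# remaining characters = the identifier itself, hex-encoded.
id_type, hex_part = WWID[0], WWID[1:]
decoded = bytes.fromhex(hex_part).decode("ascii")

print(id_type)      # 1
print(decoded)      # OPNFILERHJSSPe-LwKo-ZYqs
print(decoded[:8])  # OPNFILER
```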

Add an alias for the multipath device, keyed on the WWID obtained above, by adding the following to the /etc/multipath.conf file:

multipaths {

multipath {

wwid 14f504e46494c4552484a535350652d4c774b6f2d5a597173

alias oradata

path_grouping_policy failover

}

}
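If more LUNs are added later, the multipaths section grows by one stanza per WWID, and an unbalanced brace is an easy mistake. Here is a minimal Python sketch that renders the section from a {wwid: alias} mapping. The function name and its input format are assumptions. The output still has to be placed in /etc/multipath.conf by hand.

```python
from typing import Dict


def multipaths_section(aliases: Dict[str, str],
                       grouping_policy: str = "failover") -> str:
    """Hypothetical helper: render a multipath.conf 'multipaths' section from {wwid: alias}."""
    lines = ["multipaths {"]
    for wwid, alias in aliases.items():
        lines += [
            "    multipath {",
            f"        wwid {wwid}",
            f"        alias {alias}",
            f"        path_grouping_policy {grouping_policy}",
            "    }",
        ]
    lines.append("}")  # close the outer multipaths block
    return "\n".join(lines)


if __name__ == "__main__":
    print(multipaths_section(
        {"14f504e46494c4552484a535350652d4c774b6f2d5a597173": "oradata"}))
```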

ls -l /dev/mapper

lrwxrwxrwx. 1 root root 7 Mar 29 10:54 mpathf -> ../dm-0

Reload the multipath service. Then check the mapper directory again to confirm that the device now uses the alias specified in multipath.conf.

systemctl reload multipathd

ls -l /dev/mapper

lrwxrwxrwx. 1 root root 7 Mar 29 10:55 oradata -> ../dm-0

Run fdisk to partition the device (470913.1), then run partprobe. This creates a partition device in the mapper directory:

fdisk /dev/mapper/oradata

/sbin/partprobe

ls -l /dev/mapper

lrwxrwxrwx. 1 root root 7 Mar 29 11:01 oradata -> ../dm-0

lrwxrwxrwx. 1 root root 7 Mar 29 11:01 oradata1 -> ../dm-1

To create a udev rule that sets the appropriate ownership for the partition, use the DM_UUID value. It can be obtained with the following command:

udevadm info --query=all --name=/dev/mapper/oradata1

...

E: DM_UUID=part1-mpath-14f504e46494c4552484a535350652d4c774b6f2d5a597173

...
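The DM_UUID can also be extracted from the udevadm output in code and turned into the rule shown next. Here is a sketch that assumes the `E: KEY=value` property layout of `udevadm info --query=all`. The helper names are hypothetical, and the defaults mirror the rule in this post.

```python
from typing import Dict


def udev_properties(info_output: str) -> Dict[str, str]:
    """Collect the 'E: KEY=value' property lines from udevadm info output."""
    props = {}
    for line in info_output.splitlines():
        if line.startswith("E: ") and "=" in line:
            key, value = line[3:].split("=", 1)
            props[key] = value
    return props


def dm_ownership_rule(dm_uuid: str, owner: str = "grid",
                      group: str = "asmadmin", mode: str = "0660") -> str:
    """Build a udev rule that sets ownership on the dm device with this DM_UUID."""
    # Doubled braces produce a literal ENV{DM_UUID} in the f-string
    return (f'KERNEL=="dm-*",ENV{{DM_UUID}}=="{dm_uuid}",'
            f'OWNER="{owner}",GROUP="{group}",MODE="{mode}"')


SAMPLE = "E: DM_UUID=part1-mpath-14f504e46494c4552484a535350652d4c774b6f2d5a597173\n"

if __name__ == "__main__":
    print(dm_ownership_rule(udev_properties(SAMPLE)["DM_UUID"]))
```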

Create a udev rule as below using the obtained DM_UUID:

KERNEL=="dm-*",ENV{DM_UUID}=="part1-mpath-14f504e46494c4552484a535350652d4c774b6f2d5a597173",OWNER="grid",GROUP="asmadmin",MODE="0660"

Reload the udev rules and verify the permission change:

ls -l /dev/dm-1

brw-rw----. 1 root disk 253, 1 Mar 29 11:01 /dev/dm-1

/sbin/udevadm control --reload-rules

/sbin/udevadm trigger --type=devices --action=change

ls -l /dev/dm-1

brw-rw----. 1 grid asmadmin 253, 1 Mar 29 11:09 /dev/dm-1

Finally, verify that multipath is working by bringing down one of the NICs on the iSCSI target. Here eth0 has the IP 192.168.0.94 (sdd) and eth1 has 192.168.1.88 (sde), matching the by-path mapping shown earlier. The current multipath status is as below:

multipath -ll

oradata (14f504e46494c4552484a535350652d4c774b6f2d5a597173) dm-0 OPNFILER,VIRTUAL-DISK

size=9.5G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=1 status=active

| `- 12:0:0:0 sdd 8:48 active ready running

`-+- policy='round-robin 0' prio=1 status=enabled

`- 13:0:0:0 sde 8:64 active ready running

To test one path, stop eth0 on the iSCSI target (Openfiler server):

ifdown eth0

Check the current multipath topology.

oradata (14f504e46494c4552484a535350652d4c774b6f2d5a597173) dm-0 OPNFILER,VIRTUAL-DISK

size=9.5G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=0 status=enabled

| `- 12:0:0:0 sdd 8:48 failed faulty running

`-+- policy='round-robin 0' prio=1 status=active

`- 13:0:0:0 sde 8:64 active ready running

The path through 192.168.0.94 (sdd) has failed, and the path through sde has become active. To test the other path, bring the downed NIC back up and wait for its iSCSI path to return to active ready. Then bring down eth1.

openfiler ~]# ifup eth0

city7 ~]# multipath -ll

oradata (14f504e46494c4552484a535350652d4c774b6f2d5a597173) dm-0 OPNFILER,VIRTUAL-DISK

size=9.5G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=1 status=active

| `- 12:0:0:0 sdd 8:48 active ready running

`-+- policy='round-robin 0' prio=1 status=enabled

`- 13:0:0:0 sde 8:64 active ready running

openfiler ~]# ifdown eth1

city7 ~]# multipath -ll

oradata (14f504e46494c4552484a535350652d4c774b6f2d5a597173) dm-0 OPNFILER,VIRTUAL-DISK

size=9.5G features='0' hwhandler='0' wp=rw

|-+- policy='round-robin 0' prio=1 status=active

| `- 12:0:0:0 sdd 8:48 active ready running

`-+- policy='round-robin 0' prio=0 status=enabled

`- 13:0:0:0 sde 8:64 failed faulty running

This concludes the multipath iSCSI target setup.

To log out of all iSCSI target paths at once during testing and setup, use the following command (this will stop multipath from working):

iscsiadm -m node -u

Logging out of session [sid: 6, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260]

Logging out of session [sid: 7, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.1.88,3260]

Logout of [sid: 6, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.0.94,3260] successful.

Logout of [sid: 7, target: iqn.2006-01.com.openfiler:asm.newdata, portal: 192.168.1.88,3260] successful.

Attach iSCSI Target to VirtualBox from Host

In the previous cases the iSCSI target was attached from inside the VM, by logging in to it from the guest OS. It is also possible to attach the iSCSI target to the VirtualBox VM from the host server using the VBoxManage command. Before running the command, make sure the host server is granted access to the iSCSI target.

VBoxManage storageattach city7 --storagectl "SATA" --port 3 --device 0 --type hdd --medium iscsi --server 192.168.0.94 --target "iqn.2006-01.com.openfiler:asm.newdata" --tport 3260

iSCSI disk created. UUID: bc380eb5-e67b-49b7-9602-efbc5d6d8e87

The attached disk is then visible in the VirtualBox GUI.

When the VM is booted, the iSCSI target is available as a standard block device (/dev/sd*).

To remove the iSCSI disk from the VM, run the following:

VBoxManage storageattach city7 --storagectl "SATA" --port 3 --device 0 --medium none

However, this removes the iSCSI target only from the VM, not from the Virtual Media Manager, where it remains as an unattached device.

As a result, later attempts to attach the iSCSI target fail with a "UUID already exists" error.

VBoxManage storageattach city7 --storagectl "SATA" --port 3 --device 0 --type hdd --medium iscsi --server 192.168.0.94 --target "iqn.2006-01.com.openfiler:asm.newdata" --tport 3260

VBoxManage: error: Cannot register the hard disk '192.168.0.94|iqn.2006-01.com.openfiler:asm.newdata' {a866eaf1-f089-4a8d-b793-23028e3a1e14} because a hard disk '192.168.0.94|iqn.2006-01.com.openfiler:asm.newdata'

with UUID {bc380eb5-e67b-49b7-9602-efbc5d6d8e87} already exists

VBoxManage: error: Details: code NS_ERROR_INVALID_ARG (0x80070057), component VirtualBoxWrap, interface IVirtualBox, callee nsISupports

VBoxManage: error: Context: "CreateMedium(Bstr("iSCSI").raw(), bstrISCSIMedium.raw(), AccessMode_ReadWrite, DeviceType_HardDisk, pMedium2Mount.asOutParam())" at line 608 of file VBoxManageStorageController.cpp

To fix this, run the closemedium command with the UUID. This removes the iSCSI target from the Virtual Media Manager, so it can be attached again.

VBoxManage closemedium bc380eb5-e67b-49b7-9602-efbc5d6d8e87
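When the detach/attach cycle is scripted, the UUID that closemedium needs can be taken from the "already exists" error text. Here is a sketch. It assumes the message keeps the `with UUID {...} already exists` wording shown above, and the function name is hypothetical. It captures the existing medium's UUID, not the new one that failed to register.

```python
import re
from typing import Optional

# Assumed wording from the VBoxManage error shown above
_EXISTING_RE = re.compile(r"with UUID \{([0-9a-f-]{36})\} already exists")


def existing_medium_uuid(error_text: str) -> Optional[str]:
    """Return the UUID of the already-registered medium, or None if absent."""
    match = _EXISTING_RE.search(error_text)
    return match.group(1) if match else None


if __name__ == "__main__":
    error = ("VBoxManage: error: Cannot register the hard disk "
             "'192.168.0.94|iqn.2006-01.com.openfiler:asm.newdata' "
             "{a866eaf1-f089-4a8d-b793-23028e3a1e14} because a hard disk "
             "'192.168.0.94|iqn.2006-01.com.openfiler:asm.newdata'\n"
             "with UUID {bc380eb5-e67b-49b7-9602-efbc5d6d8e87} already exists")
    uuid = existing_medium_uuid(error)
    if uuid:
        print(f"VBoxManage closemedium {uuid}")
```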

This concludes using openfiler to create iSCSI targets on VirtualBox.